SAN system buying guide: The fundamentals

Use this guide to determine if a SAN is right for your environment, or if you should go with DAS or NAS.

By Jacob Gsoedl

Even with the continuous use of DAS and increased use of NAS, SANs continue to provide medium- and large-sized enterprise organizations with reliable shared storage over high-speed Fibre Channel, iSCSI and Fibre Channel over Ethernet protocols.

And if you’ve ever had the pleasure of purchasing a SAN system, you know slogging through the marketing and sales speak can make getting the right system tough.

This article is the first in a series that will guide you through the SAN buying process and give you the information you need to make an educated purchase decision. It will help you decide if a SAN system is right for your organization, or if another storage architecture like DAS or NAS would be a better fit.

The second article will describe the critical features, performance metrics and purchasing criteria you should consider when preparing a vendor request for proposal.

The third article will compare market-leading SAN systems against the purchasing criteria, as well as against each other, giving you an expert opinion to help ensure that the system you ultimately purchase is the right one for your organization.

Storage platform architectures: The basics

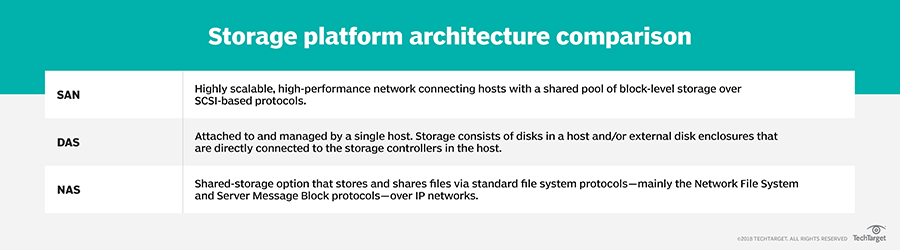

A SAN is a highly scalable, high-performance network that connects hosts, usually servers, with a shared pool of block-level storage over SCSI-based protocols, including Fibre Channel (FC), iSCSI and Fibre Channel over Ethernet (FCoE).

DAS is attached to and managed by a single host. DAS storage consists of disks in a host and/or external disk enclosures that are directly connected to storage controllers in the host.

NAS is another shared-storage option that is commonly used to store and share files via standard file-system protocols — mainly the Network File System and Server Message Block protocols — over IP networks.

Arrays are the essence of a SAN system because they provide the physical storage resources. They are available as iSCSI arrays that communicate over IP networks and as FC arrays that require an FC network.

For a long time, SAN was equated with FC, but in the last decade, Ethernet-based iSCSI arrays have emerged and quickly encroached on the lucrative SAN market, first in the small- to medium-sized business space, and, with the emergence of 10 Gigabit Ethernet, in the enterprise space. An iSCSI SAN runs over IP networks and Ethernet-based network components that can be managed by traditional network administrators, significantly lowering price and complexity. In general, FC still surpasses iSCSI in reliability and robustness.

The ability to scale capacity and performance is one of the primary reasons for deploying a SAN. The SAN architecture and network, as well as the SAN arrays themselves, are designed to be highly scalable. Other reasons to deploy a SAN rather than DAS include high availability, resilience, efficiency and centralized management.

FC SANs are more complex and costly to operate than DAS and NAS systems. They require specialized knowledge and are typically run by dedicated administrators who manage FC switches and directors and handle the various FC-specific configurations, from zoning, logical unit number (LUN) masking, virtual SANs and inter-switch link (ISL) trunking to FC host bus adapter configurations.

Although iSCSI SANs have narrowed the gap, and DAS and NAS systems have become more popular with the rise of remote computing, big data analytics and unstructured data, FC SANs are usually still the technology of choice for very large and demanding networks.

Examining the scale, capacity and performance of the SAN

An FC SAN’s ability to scale capacity and performance is a primary driver for its continued popularity, and that starts with the SAN architecture and network. An FC SAN can be as simple as attaching servers directly to multi-port SAN arrays, or it can consist of multiple director-level FC switches that connect servers and SAN arrays in high-availability configurations. SAN arrays can be scaled up (vertically) by adding processing power, memory, ports and disk drives.

Many contemporary SAN arrays support solid-state storage, which performs an order of magnitude better than mechanical disk drives. Some SAN arrays use solid-state drives (SSDs) as a cache in front of disk drives, others allow mechanical disk drives to be replaced with SSDs, and some support both.

Multiple tiers of storage, such as the combination of a fast solid-state tier and slower disk tiers, and the ability to automatically move data between different tiers of storage enable SAN arrays to scale cost efficiently.
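As a rough illustration of automated tiering, the sketch below places the most frequently accessed extents on a small solid-state tier and leaves the rest on disk. The function name, extent IDs and access counts are hypothetical; real arrays use vendor-specific heuristics and migrate data in the background.

```python
def place_extents(access_counts, ssd_slots):
    """Assign the hottest extents to the SSD tier and the rest to disk.

    access_counts: dict mapping extent ID -> number of recent accesses
    ssd_slots: how many extents fit in the SSD tier (hypothetical unit)
    """
    # Rank by access count, highest first; break ties by extent ID.
    ranked = sorted(access_counts, key=lambda e: (-access_counts[e], e))
    hot = set(ranked[:ssd_slots])
    return {e: ("ssd" if e in hot else "disk") for e in access_counts}


# Made-up access statistics for four extents and room for two on SSD.
counts = {"e1": 900, "e2": 15, "e3": 420, "e4": 3}
placement = place_extents(counts, ssd_slots=2)
```

Here the two busiest extents, `e1` and `e3`, land on the SSD tier, so most I/O is served from fast storage while most capacity stays on cheaper disk.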

Some SAN arrays support quality of service (QoS) features that allow some LUNs and data to have a higher priority than others, enabling oversubscription without jeopardizing critical applications. Like the support of multiple storage tiers, QoS enables cost-efficient scaling of a SAN.

Scaling a SAN array vertically has its limits; once the scale-up limit is reached, you must move to a higher-performance array or add more arrays. To avoid this, a growing list of SAN arrays support scaling out (horizontally) by adding storage nodes to scale both capacity and performance at the same time.

SAN system availability and resilience

Continuous availability and resilience are other reasons for deploying SANs. Highly available SANs are designed to have no single points of failure, starting with highly available SAN arrays and switches with redundant critical components and redundant connections to the SAN network.

One strategy in designing redundant SANs is to connect each storage node to the next node via dual or multiple paths. To protect against unwanted interference, zoning and LUN masking provide isolation within a SAN fabric; Cisco Systems’ Virtual SAN and Brocade’s Logical SAN features enable isolation across multiple SAN fabrics.
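The two isolation layers can be pictured as two independent checks that must both pass. The following is a minimal sketch, not any vendor's implementation: the zone names, host and array names, and the `can_access` helper are all hypothetical, and real fabrics identify ports by worldwide names rather than friendly labels.

```python
# Hypothetical fabric zoning: zone name -> set of member ports.
ZONES = {
    "zone_db": {"host_a", "array_1"},
    "zone_web": {"host_b", "array_1"},
}

# Hypothetical masking table on the array: (array, LUN) -> hosts allowed to see it.
LUN_MASKS = {
    ("array_1", 0): {"host_a"},
    ("array_1", 1): {"host_b"},
}


def can_access(host, array, lun):
    """Return True only if zoning and LUN masking both permit access."""
    # Zoning: host and array must share at least one zone in the fabric.
    zoned = any(host in m and array in m for m in ZONES.values())
    # LUN masking: the array must expose this LUN to this host.
    unmasked = host in LUN_MASKS.get((array, lun), set())
    return zoned and unmasked
```

With this configuration, `host_a` can reach LUN 0 on `array_1` but not LUN 1, even though both hosts are zoned to the same array.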

Finally, robust error handling and error management, and the ability to self-correct are critical capabilities to ensure continuous storage services.

Lowering costs with storage efficiency

Reducing total cost of ownership of storage is yet another reason for deploying SANs. The total cost of SAN storage decreases as the number of servers and amount of managed storage grow.

To start with, SANs enable high storage utilization and treat unused storage as spare capacity to support storage growth. Furthermore, most contemporary SAN systems support one or more of the following storage efficiency features to help maximize utilization of the available physical storage:

- Thin provisioning allows storage to be assigned to hosts beyond available physical capacity, and physical storage resources are allocated to a thin-provisioned LUN on an as-needed basis. The cost savings of thin provisioning can be tremendous and it enables storage utilization beyond 90%.

- Efficient clones enable LUN cloning by referencing blocks in the source LUN, and as a result, the cloned LUN uses a very small amount of physical storage. Also called zero-cost clones, they aid in reducing deployment times and required storage capacity.

- Data deduplication reclaims storage by identifying duplicate blocks of data and replacing them with references to a single stored copy of each unique block; duplicates are usually identified by comparing block hash codes.

- Data compression reduces the amount of required storage by applying compression algorithms while data is written and decompressing data when it is read.
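To make thin provisioning and deduplication concrete, here is a toy block pool that allocates physical blocks only on write and stores each unique block once. It is a sketch under simplifying assumptions: the `ThinDedupPool` class and its method names are invented for illustration, and it has no reference counting, so overwritten blocks are never reclaimed.

```python
import hashlib


class ThinDedupPool:
    """Toy pool: allocates on write and keeps one copy per unique block."""

    def __init__(self, physical_blocks):
        self.physical_blocks = physical_blocks  # physical capacity, in blocks
        self.store = {}   # content hash -> block data
        self.luns = {}    # LUN name -> {logical block address: content hash}
        self.sizes = {}   # LUN name -> provisioned size, in blocks

    def create_lun(self, name, size_blocks):
        # Thin provisioning: nothing is reserved when the LUN is created.
        self.luns[name] = {}
        self.sizes[name] = size_blocks

    def write(self, lun, lba, data):
        if not 0 <= lba < self.sizes[lun]:
            raise IndexError("LBA outside provisioned LUN size")
        digest = hashlib.sha256(data).hexdigest()
        if digest not in self.store:
            # Only previously unseen content consumes physical capacity.
            if len(self.store) >= self.physical_blocks:
                raise RuntimeError("pool out of physical capacity")
            self.store[digest] = data
        self.luns[lun][lba] = digest

    def read(self, lun, lba):
        digest = self.luns[lun].get(lba)
        # Unwritten blocks have no physical backing; this toy returns None.
        return self.store[digest] if digest else None

    def provisioned(self):
        return sum(self.sizes.values())

    def used(self):
        return len(self.store)


pool = ThinDedupPool(physical_blocks=4)
pool.create_lun("lun0", size_blocks=10)
pool.create_lun("lun1", size_blocks=10)  # 20 blocks provisioned on 4 physical
pool.write("lun0", 0, b"AAAA")
pool.write("lun1", 7, b"AAAA")           # duplicate content, stored once
pool.write("lun1", 8, b"BBBB")
```

After these writes, 20 blocks are provisioned to hosts but only two physical blocks are consumed, which is the over-allocation and space reclamation the list above describes.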

Centralized storage improves management

Unlike DAS, where storage is managed separately on each server, a SAN provides a central place to provision storage, analyze storage usage and performance, and perform storage configurations. Central management also simplifies the governance of storage infrastructure and enables compliance with service-level agreements and regulatory requirements.

Some contemporary SAN arrays support heterogeneous storage virtualization to combine smaller storage arrays into larger virtual storage pools that are managed under the same SAN umbrella. Last but not least, SANs simplify data protection of storage with features like snapshots that enable restores to prior points in time, and data replication to copy data to other arrays and sites.

SAN vs. DAS

Without question, shared storage that’s accessed over a network is appealing, but does it justify the additional expenditure and complexity inherent to SANs? After all, servers ship with storage and, with the availability of very large disk drives, a server can be stuffed with tens and even hundreds of terabytes of DAS at a very reasonable cost.

One of the big challenges of DAS is that it’s only accessible by a single host, and as a result only a fraction of the available storage is usually in use. The larger the number of servers, the more unused DAS storage capacity you’ll end up with, resulting in dismal overall storage utilization. While 70% to more than 90% storage utilization is very common in SAN storage, DAS storage utilization is usually a fraction of that.
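A quick back-of-the-envelope calculation shows how stranded capacity adds up. All figures below are made up for illustration (20 servers, 10 TB of DAS each, 3 TB used per server, and a 75 TB shared pool); they are not measurements.

```python
def utilization(used_tb, capacity_tb):
    """Return used capacity as a fraction of total capacity."""
    return used_tb / capacity_tb


# Hypothetical fleet: 20 servers, each with 10 TB of DAS and 3 TB of data.
servers = 20
das_capacity_tb = servers * 10   # 200 TB purchased across all servers
data_tb = servers * 3            # 60 TB actually in use
das_util = utilization(data_tb, das_capacity_tb)

# The same 60 TB consolidated on a hypothetical 75 TB shared pool.
san_capacity_tb = 75
san_util = utilization(data_tb, san_capacity_tb)
```

In this example, DAS utilization is 30% while the shared pool runs at 80%, and the pool needs 125 TB less raw capacity to hold the same data.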

The other challenge with DAS is storage management. From provisioning, monitoring and backup to reporting, storage directly attached to servers is managed individually. The larger the number of servers, the more difficult DAS becomes to effectively manage.

In environments without shared storage, the quality of storage management typically tracks the importance of each server and the applications running on it. Besides the challenge of having to manage each server’s storage individually, DAS lacks advanced storage management features that are common in SAN arrays, such as snapshots, replication and thin provisioning.

SAN vs. NAS

NAS systems share many of the storage management features of a SAN, including snapshots, replication and thin provisioning, and NAS systems are usually simpler to manage than SANs. If you primarily store and share unstructured data, a NAS system would be a better storage platform than a SAN.

But pure NAS systems are ill-suited for applications that require block-level storage access. In an attempt to get a piece of the lucrative SAN market, some NAS vendors, most notably NetApp, have added block-level protocol support to their NAS filers.

Likewise, SAN vendors have been adding file-system protocol support to their SAN platforms either natively or via NAS gateways. Today, an increasing number of shared storage platforms support both block- and file-level protocols.

In general, a SAN is more versatile than NAS. While it’s relatively easy to share block-level storage as a file share, it’s significantly more challenging to add block-level protocol support to a pure NAS.

Conclusion

SANs, especially FC SANs, are more complex than DAS and NAS, and should be deployed for the right reasons. They are used for applications that require shared block-level storage, such as certain clustered applications and databases, but their main use is for storage consolidation.

Storage consolidated in a SAN enables more efficient use and management of storage, especially in environments with a large number of nodes. Consolidation demands scale and robustness, and this is where a SAN system excels. Better storage utilization and improved storage management result in substantial cost savings. Additionally, a SAN creates a clean separation between storage and other IT services. Even though this may create an additional management layer, the resulting focus yields better storage services — increased security, improved performance and higher availability.